Continuing on the articles on whats new in python 3.11, today we will look at an nice improvement in this version: faster runtime.

There have been many changes made to the interpreter that make it faster, but in this article we will focus on one of the interesting changes - a specialising adaptive interpreter.

What is bytecode?

Before we go into how the interpreter works, let us take a moment to understand bytecode.

Unlike a pure interpreter, python actually compiles the code into a form called bytecode. In static compiled languages like Java, which also compiles to bytecode, the compilation is done prior to running the code. Python, by contrast, does the compilation at runtime when the module is loaded for the first time.

So what is bytecode?

Before answering that question, let us take a step back and understand machine code. Machine code is the code that is actually executed by the chip or processor in the computer. The processor defines certain codes for different actions and when those codes are supplied to the processor then the corresponding action is executed by the processor.

Traditional compilation, like compiling a C program takes the high level code written in the programming language and converts it into the equivalent machine code instructions that the processor can execute. When you run the program, these instructions are sent to the CPU and the program gets executed.

The problem with this method is that you have to compile the program separately for every processor or hardware that you want to run the code on, as each one might define a different set of machine code instructions.

To get around this problem, language designers came up with the concept of bytecode. Bytecode is a hardware neutral set of instructions that are higher level than machine code. Your program would get compiled into bytecode and then another software, would execute the bytecode and cause the program to run. Since the bytecode is independent of hardware, the code can be run on any hardware for which that software is available. This is how a language like Java works, for example.

For python, both the compilation of python code to bytecode, as well as running the bytecode instructions are done at runtime by the python interpreter.

Here is an example

Create a file called util.py with this code

def add(a, b):

return a + bThe create another file test.py in the same directory with this

from util import add

print(add(3, 5))Then run the file test.py on the command line

> python test.pyCheck the files in the directory, and you should now see that a file __pycache__/util.cpython-311.pyc has got created

This .pyc file, which stands for python-compiled is the output of the compilation process. It contains the bytecode instructions for util.py. Next time we run test.py again, python will not compile again, it will just execute the bytecode directly from the .pyc file.

An Example of Python Bytecode

So what is the bytecode that python has compiled? We could open up the .pyc file, but that will just give us a bunch of bytes and unless we look up the bytecode instructions it will not make any sense.

Instead, python has a nice package to do this. It's called dis. Lets take a look

import dis

def add(a, b):

return a + b

dis.dis(add, adaptive=True)This will print out the bytecode using human readable instruction names. The output should be like this

1 0 RESUME 0

2 2 LOAD_FAST 0 (a)

4 LOAD_FAST 1 (b)

6 BINARY_OP 0 (+)

10 RETURN_VALUEAccording to this, the code return a + b on line 2 of our function has been converted to four bytecode instructions. The first one loads the parameter a, the next one loads the parameter b, the third one does the + operation and the last one returns the value.

Specialising Adaptive Interpreter

The bytecode BINARY_OP 0 (which represents the + operation) is a what is called a generic bytecode instruction. After all, we can also call add("abc", "xyz") and that is totally valid code for a dynamic language like python because strings also support the + operation. So BINARY_OP will work with any data types and do the required calculation.

Now then, let us run this code. Here we call add ten times adding 3 and 5

for i in range(10):

add(3, 5)

dis.dis(add, adaptive=True)Note the output this time

1 0 RESUME_QUICK 0

2 2 LOAD_FAST__LOAD_FAST 0 (a)

4 LOAD_FAST 1 (b)

6 BINARY_OP_ADD_INT 0 (+)

10 RETURN_VALUEIn particular, the bytecode BINARY_OP had been replaced with the bytecode BINARY_OP_ADD_INT. This is an optimised version of + for adding two integers together, which bypasses many of the checks that are usually done, for example a check to see it the data types support the + operator, locating the __add__ method etc.

BINARY_OP_ADD_INT is an example of a specialised bytecode instruction. It works only for adding two integers and it highly optimised for doing that specific operation.

What has happened here is that the python runtime has noticed that we are calling + repeatedly with two integers and therefore – at runtime – it changes the bytecode and specialises it. Everytime in the future, it will run the optimised bytecode and the code will run faster.

This is the specialising adaptive interpreter in action. The interpreter analyses the execution of bytecode. If it sees certain patterns that can be optimised, then it changes the bytecode to the specialised version at runtime.

What happens if we run the function with strings? Let us try this

add("abc", "xyz")

dis.dis(add, adaptive=True)Note that the code does not give an error. You can still call add with strings and it will still work.

The bytecode is also still the same.

1 0 RESUME_QUICK 0

2 2 LOAD_FAST__LOAD_FAST 0 (a)

4 LOAD_FAST 1 (b)

6 BINARY_OP_ADD_INT 0 (+)

10 RETURN_VALUEIn this case, the specialised bytecode will fall back to the generic version because it didn't get two integers as input. But the interpreter will take this call with two strings as an exception and retains the same bytecode. It is still expecting in the code to be called with two integers in the future.

Now, if we were to add strings a hundred times

for i in range(100):

add("abc", "xyz")

dis.dis(add, adaptive=True)We see that the interpreter has now changed the bytecode to BINARY_OP_ADD_UNICODE which is the specialised bytecode to add two strings

1 0 RESUME_QUICK 0

2 2 LOAD_FAST__LOAD_FAST 0 (a)

4 LOAD_FAST 1 (b)

6 BINARY_OP_ADD_UNICODE 0 (+)

10 RETURN_VALUESo thats the idea. The specialised adaptive interpreter will look for patterns and specialise the bytecode so that future executions can run on an optimised path. There are various bytecodes like BINARY_OP which are called "adaptive instructions" and they can be specialised at runtime.

The specialist tool

There is a nifty tool that you can use to visually see where the code is getting specialised without having to dig around in the bytecode. It's called specialist and you can get it from pypi

> pip install specialistLet us see it in action. Put this code in test.py

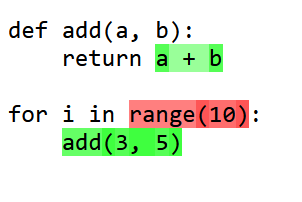

def add(a, b):

return a + b

for i in range(10):

add(3, 5)Then go to the command line and run

> specialist test.pyspecialist will run the script with python, then analyse the bytecode to calculate which lines were specialised. It will then open a web browser with the output. You should see something like this on your screen

The lines highlighted in green were successfully specialised. You can see that apart from a + b even the function call add(3, 5) was specialised.

Code in red was available for specialisation, but it didn't get specialised. In this case, range(10) was executed only once, so it never got specialised. Had that code executed more often (in python 3.11, the threshold is eight times) then it would have been specialised.

The remaining code in white cannot be specialised.

This is a pretty nice way to see what the specialising adaptive interpreter is doing to your code, and provides a new tool for optimising performance bottlenecks.

Summary

In order to take maximum advantage of this new interpreter code, you may want to use the specialist tool to verify that any time sensitive code or performance bottlenecks are getting successfully specialised so that they get the speed boost from the new interpreter.

Python 3.11 is about 10-60% faster than Python 3.10. Specialising Adaptive Interpreter is not the only change. There have been various other speedups that have been incorporated into Python 3.11 as well, and we will look at them in future articles.

Did you like this article?

If you liked this article, consider subscribing to this site. Subscribing is free.

Why subscribe? Here are three reasons:

- You will get every new article as an email in your inbox, so you never miss an article

- You will be able to comment on all the posts, ask questions, etc

- Once in a while, I will be posting conference talk slides, longer form articles (such as this one), and other content as subscriber-only